A Changing Landscape in K–12 Assessment

Step into any American classroom and you’ll notice a shift: a changing of the guard is under way in daily instruction. The old routines of essay assessment, long treated as immutable, now wobble under the weight of new demands: accountability, data-driven instruction, and the unrelenting need to support teachers already stretched thin. The pressure is felt at every level of education. Administrators who once believed reform alone could solve grading inefficiencies now recognize the limits of human stamina. Teachers, particularly those in English, history, and science, still shoulder hours of handwritten feedback, even after a decade of digital “transformation.” The bottleneck persists.

One can’t help but ask a slightly subversive question: What if grading were not an ordeal at all? What if it served as a conduit—linking feedback, instruction, and actual student growth with something resembling coherence?

Essay Eye, an AI essay feedback and scoring platform for schools, was built to answer precisely that question. Its mission sounds almost disarmingly simple: equip educators with the means to deliver precise, consistent, high-quality feedback at scale, without dulling the human edge that makes authentic teaching what it is. There is no substitute for a highly effective teacher in the classroom. For administrators seeking district-wide writing assessment tools, the implications stretch far beyond convenience: higher morale, more consistent instruction, and, at last, data that anchors writing improvement to measurable outcomes.

The Grading Challenge in K–12 Education

Administrators know the truth even when no one says it aloud: grading steals time from the very work students need most. And one blunt truth about writing assignments remains constant: many teachers avoid assigning essays because of the daunting task waiting just around the corner, the grading itself. When students get too little practice in one of life’s most essential skills, the consequences reverberate well beyond professional development plans, instructional pacing, and student confidence, reaching workplaces from coast to coast.

The Weight of Feedback Fatigue

Here is the reality of writing assessment for educators who pursue their craft with integrity. Teachers routinely track their grading hours informally, often with a grimace. In writing-heavy units, it isn’t uncommon for feedback-related tasks to consume 10, 15, or even 20 hours of personal time in a single stretch. Some teachers describe feeling perpetually “behind the next unit,” caught in a loop where feedback loses its impact because it arrives late: by then, students have moved on to the next unit and forgotten most of what they originally wrote. Statistically, none of this is surprising. According to broad national surveys, teachers report spending roughly 12 hours per week on assessment tasks alone. Fatigue is not a metaphor; it is a measurable drag on instructional quality.

Fragmented Tool Adoption Across Districts and Departments

Districts often invest in writing assessment tools for K–12 education, only to watch them sputter in uneven implementation. One department loves a particular platform; another rejects it because the interface feels foreign to their discipline. Soon enough, the school becomes a patchwork of tools, logins, rubrics, and teacher expectations. This balkanization undermines something administrators desperately need: cohesion. An “all-in-one” tool sounds promising—until it fails to support writing across content areas. Schools end up with data silos instead of school-wide insight.

Timing matters just as much. Secondary students, especially those in middle school, work within short cognitive windows: if feedback lingers too long in the queue, the moment for reflection fades. Writing improves most when guidance is specific, immediate, and directly tied to the student’s thinking process, not when it arrives weeks later like a bureaucratic summons. Some districts found a direct correlation between slow feedback cycles and declining benchmark writing performance. The takeaway is obvious: speed counts, and without technological support, speed is precisely where teachers are most constrained today.

For administrators, the ripple effect grows into a broader concern: misaligned tools, inconsistent feedback standards, and muddy data. When departments drift in separate digital directions, the district loses visibility into writing instruction as a whole. This raises the question many district leaders now ask: Is there a platform capable of bridging subjects, accelerating feedback, and supporting district-wide coherence without flattening teacher autonomy? Essay Eye steps directly into that gap.

How Essay Eye Enhances School-Wide Efficiency

Designed as more than automated essay grading software for schools, Essay Eye functions as a multi-disciplinary writing assessment companion. Its architecture rests on three pillars—efficiency, consistency, adaptability—each aligned with what K–12 systems actually need, not what vendors assume they need.

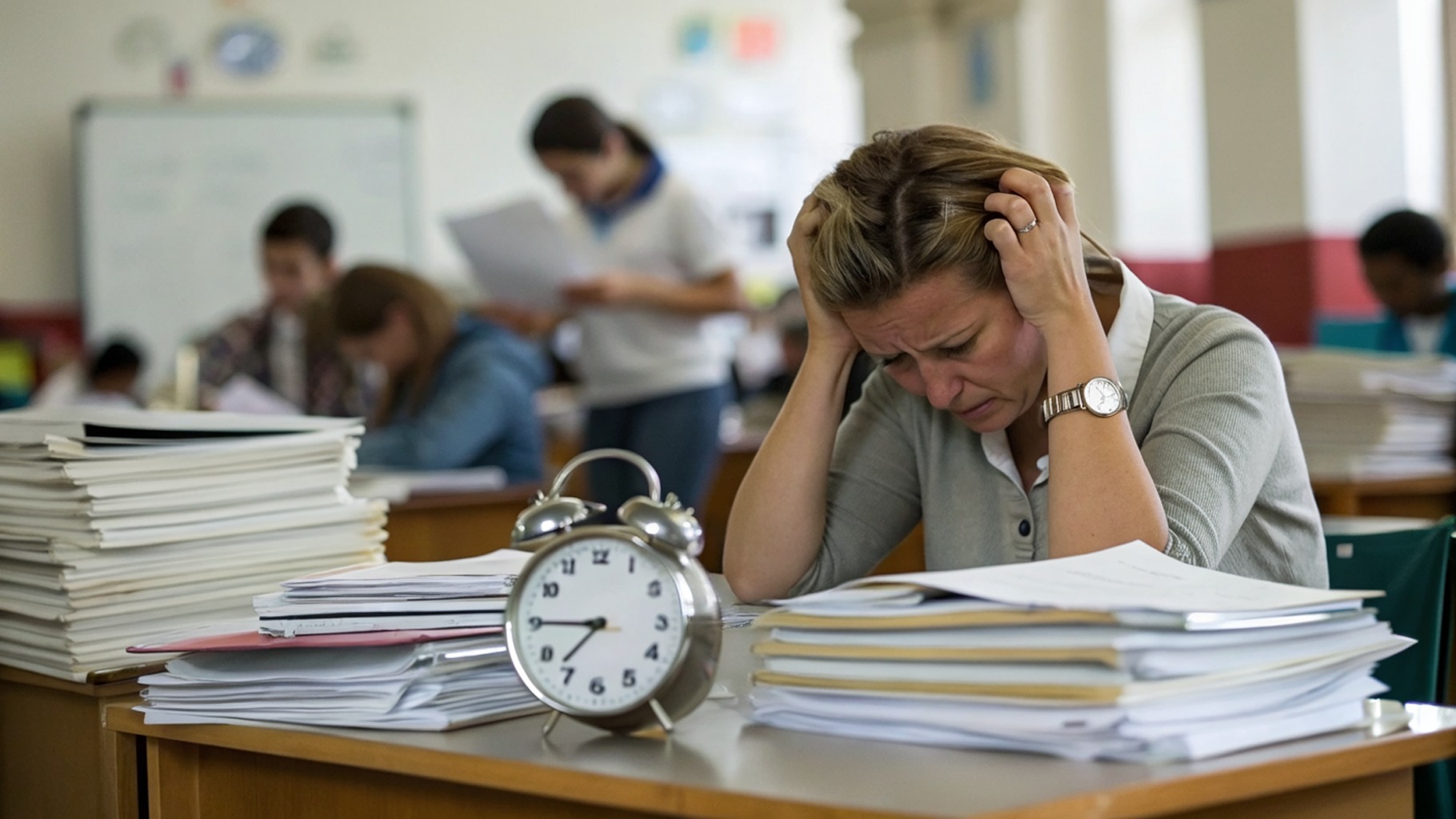

Efficiency Across ELA, History, and STEM

In English language arts, the platform evaluates thesis clarity, textual evidence, and overall coherence. Teachers receive draft-level analytics instantly, something impossible under traditional grading timelines. In history and social science, Essay Eye serves as a writing feedback automation tool, examining argumentation, evidence integration, and source reasoning. Its automated checks handle the mechanical aspects, freeing teachers to address the depth and historical nuance students often struggle with. Finally, in STEM writing (lab reports, explanatory essays, research summaries), the platform highlights reasoning gaps, unclear explanations, and misused technical language. The system’s precision supports literacy in scientific reasoning, not just correctness. In short, every department benefits when schools and districts adopt this writing assessment tool for K–12 classrooms.

Consistency Supported by Standards Alignment

In district-wide implementations, the most persistent complaint is inconsistency. Teachers grade with different rubrics, tones, and levels of rigor. Essay Eye counters this fragmentation by aligning its feedback with state standards, Common Core expectations, and disciplinary norms. The result? Staff members gain a shared language, comparable expectations, and writing-assessment dashboards that reveal clear, reliable patterns rather than contradictory anecdotes.

Minimal Training, Maximum Adoption

A 2024 pilot in a California district revealed something administrators rarely experience: teachers adopted the tool with almost no friction. Within two sessions and roughly 30 minutes of orientation, teachers were evaluating essays, syncing with Google Classroom, and using the LMS integration tools without external coaching.

High adoption is not a mystery; it’s the natural outcome of simplicity.

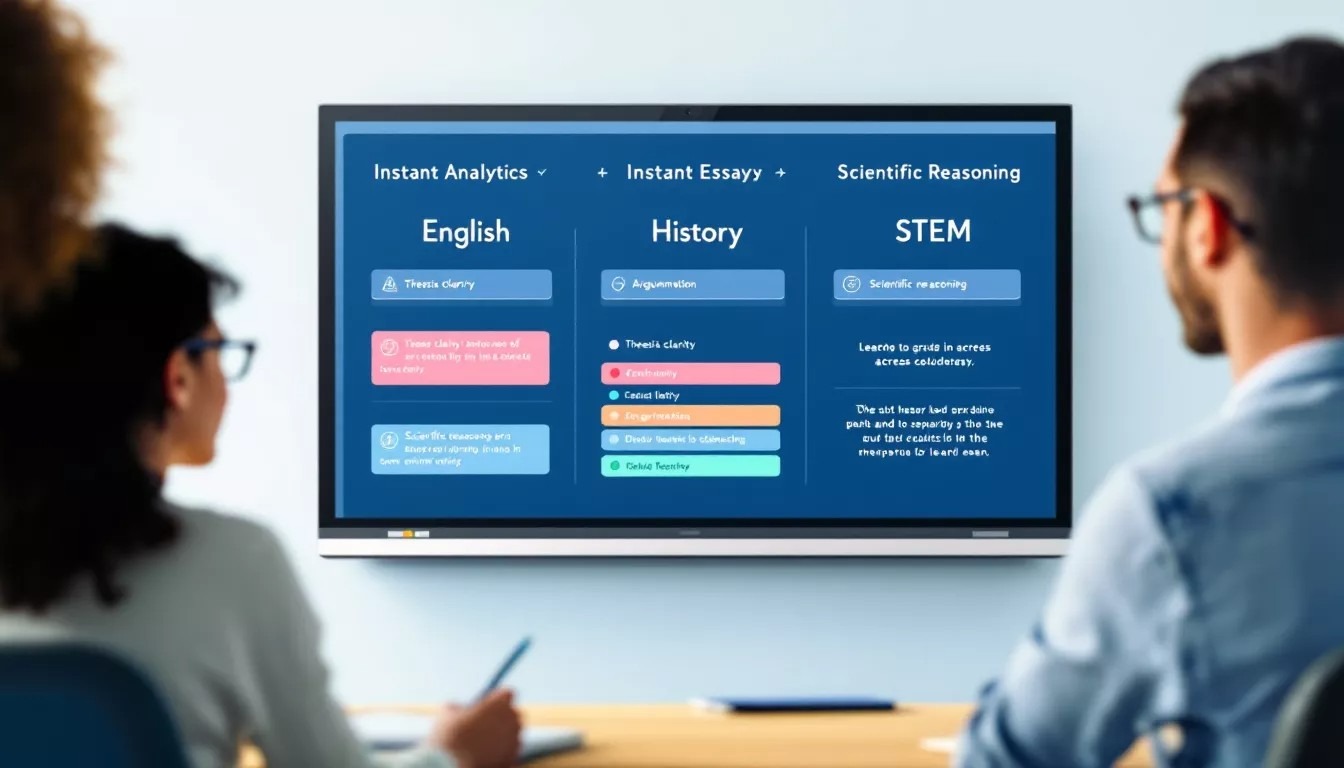

Automating the Tedious, Preserving the Human

As part one of this blog draws to a close, some readers may fear that AI will replace teaching. The fear is understandable, but misplaced. Essay Eye’s design philosophy is augmentative, not antagonistic. The system handles mechanical corrections (syntax, grammar, citation alerts) so teachers can devote more attention to developmental writing guidance. It also works from pre-loaded rubrics aligned to state standards for organization and content. One veteran educator put it plainly: “The AI doesn’t replace my judgment; it sharpens the places where I don’t have enough time to look.” This reallocation of attention strengthens the teacher–student relationship. Feedback becomes generative rather than punitive. Students experience writing as a process rather than a verdict.

Essay Eye is firmly committed to a human-centered approach to AI in education. There is a paradox at the heart of the platform: the tool is algorithmic, yet deeply human in its purpose. It does not assign scores and walk away; it identifies opportunities for growth. Many educators enter cautiously, asking whether an algorithm can detect nuance. The surprising answer, borne out in practice, is yes, particularly in structural clarity, coherence, and logical reasoning. What teachers often underestimate is how much of their feedback time these “basic assessments” consume. Once early drafts become intelligible, teachers are free to focus on creativity, argumentation, and synthesis, the distinctly human components of writing.

Bridging the Feedback Gap

Timeliness determines whether feedback transforms writing or merely documents its flaws. Essay Eye accelerates this cycle by supplying teachers with a scaffold: preliminary comments, patterns of strengths and weaknesses, and rubric maps aligned with district expectations. One teacher described the transformation succinctly: “My students finally understand that writing evolves: they revise because the system nudges them to revise.” That mindset shift (from finished product to living draft) may be the most important improvement any district technology solution for writing assessment can deliver.

A Multi-Disciplinary AI Partner in Modern Schools

Most automated essay grading systems for districts fail for a predictable reason: they were built with a single department in mind. Essay Eye’s strength lies in its breadth.

ELA Integration

Feedback aligns with AP and Common Core criteria. Suggestions move beyond grammar, addressing reasoning, evidence weaving, argument strength—everything that distinguishes advanced writing.

History Integration

Historical reasoning becomes visible. Essay Eye surfaces gaps in causation, context, and evidence sourcing—areas students often struggle to articulate.

STEM Integration

Scientific writing gains clarity. Teachers see patterns in students’ explanatory weaknesses, allowing targeted intervention.

This cross-disciplinary model is what sets Essay Eye apart from even the best AI essay grading platforms for schools: it sees writing as a district-wide skill, not a departmental quirk.

The Data Behind the Shift

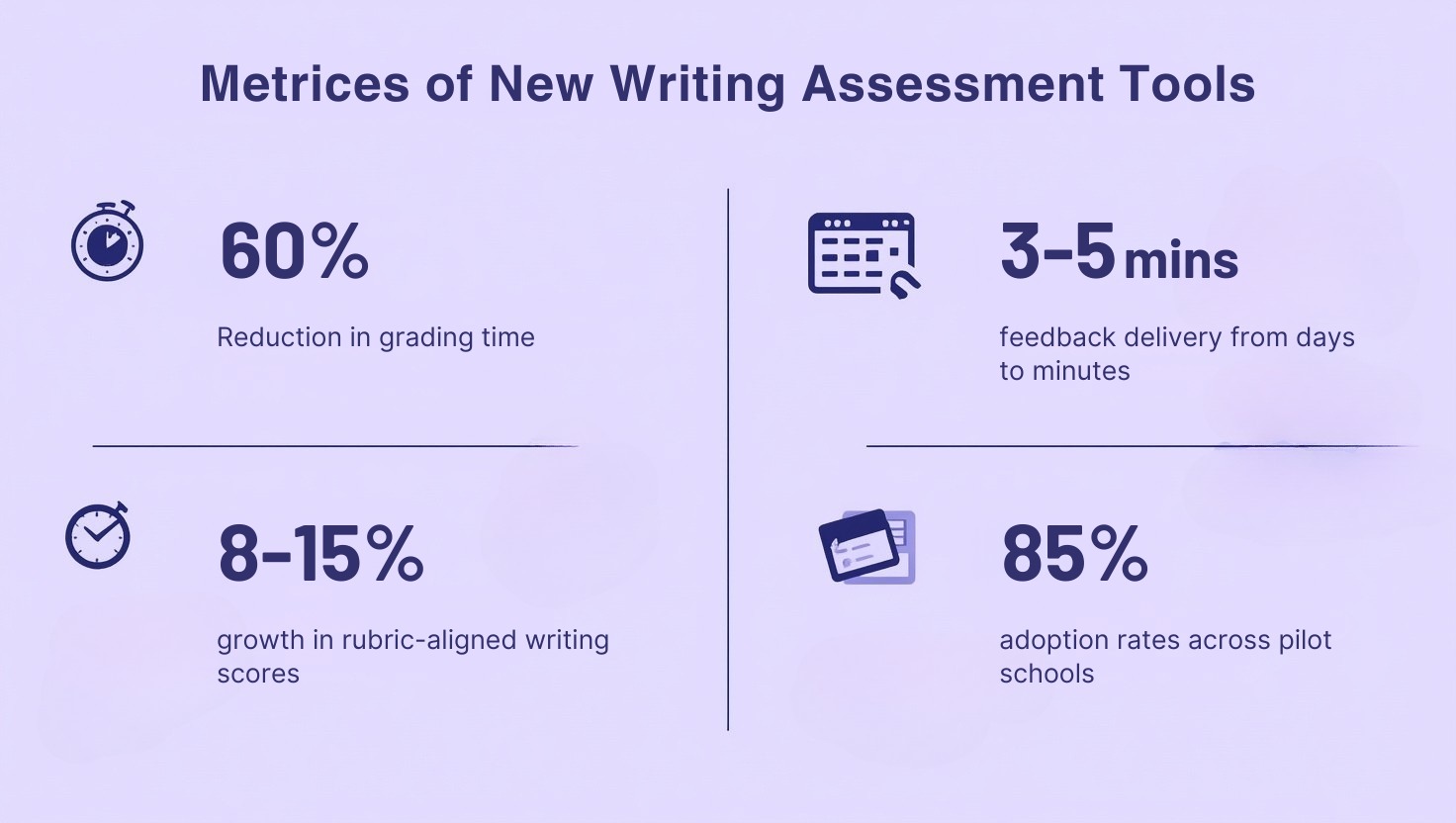

Administrators demand measurable outcomes, not marketing gloss. Early implementations point toward several recurring metrics:

- Up to 60% reduction in grading time

- Feedback delivery shrinking from days to minutes

- 8–15% growth in rubric-aligned writing scores within a semester

- Adoption rates above 85% across pilot schools

These data points offer more than efficiency—they signal cultural change. Teachers begin to view feedback as collaborative; students begin to view revision as natural.