In today’s rapidly evolving educational landscape, the integration of artificial intelligence (AI) into classrooms is no longer a novelty; it is a necessity. With rising class sizes, increased demands on teacher time, and the need for personalized learning, AI tools like Essay Eye, an advanced AI-powered essay grader, are poised to support educators in meaningful and measurable ways. However, a growing chorus of critics continues to raise concerns about the so-called “black box” problem associated with large language models (LLMs), citing issues like hallucinations, opacity, and unpredictability. These concerns are not without merit, but they are often outdated or misapplied, especially when evaluating the safety and efficacy of classroom-specific AI solutions like Essay Eye.

This essay addresses the most common criticisms of AI’s “black box” nature, explains recent advances in AI interpretability, and shows educators how Essay Eye is designed as a transparent and trustworthy essay grader that enhances teaching rather than replacing it.

Understanding the “Black Box” Concern

The term “black box” refers to any system where the internal mechanisms are not visible or easily understood by the user. In the context of large language models like GPT-4 or Claude 3, critics argue that the model’s outputs are generated through processes too complex or opaque to be trusted—especially in sensitive domains like education. These critics worry that teachers can’t verify how a grade was assigned or whether a piece of feedback was accurate, unbiased, or contextually appropriate. In short: if we don’t fully understand how an AI arrives at a decision, how can we trust it?

This concern stems from the fact that LLMs are not built like traditional software. They aren’t programmed line-by-line with explicit instructions. Instead, they are trained on vast datasets and form probabilistic associations between words, phrases, and concepts. This learning process results in a powerful but nonlinear decision-making architecture—more like a neural web than a straight line of code.

The worry is real: LLMs have been known to “hallucinate” facts, invent citations, and generate outputs that may be factually wrong or subtly biased. But equating these general limitations with the behavior of responsibly integrated tools like Essay Eye’s essay grader is misleading. It also does a disservice to educators who stand to benefit greatly from AI-powered classroom solutions, provided those solutions are built the right way.

Not All AI Tools Are the Same

Lumping all AI applications into a single basket is intellectually lazy and practically harmful. Essay Eye, for instance, is not a generic chatbot or an open-ended LLM interface like ChatGPT. It is a purpose-built, classroom-aligned, curriculum-sensitive essay grader that evaluates student writing against clearly defined rubrics. The Essay Eye platform operates within constrained parameters and offers transparency that generic LLM tools do not.

Unlike open-ended models that can veer off-topic or invent data, Essay Eye is trained to prioritize rubric-based grading, evidence-based feedback, and grade-level alignment. It integrates with classroom tools such as Google Docs and Google Classroom to create a seamless grading pipeline that mirrors traditional teacher expectations while accelerating the feedback cycle.

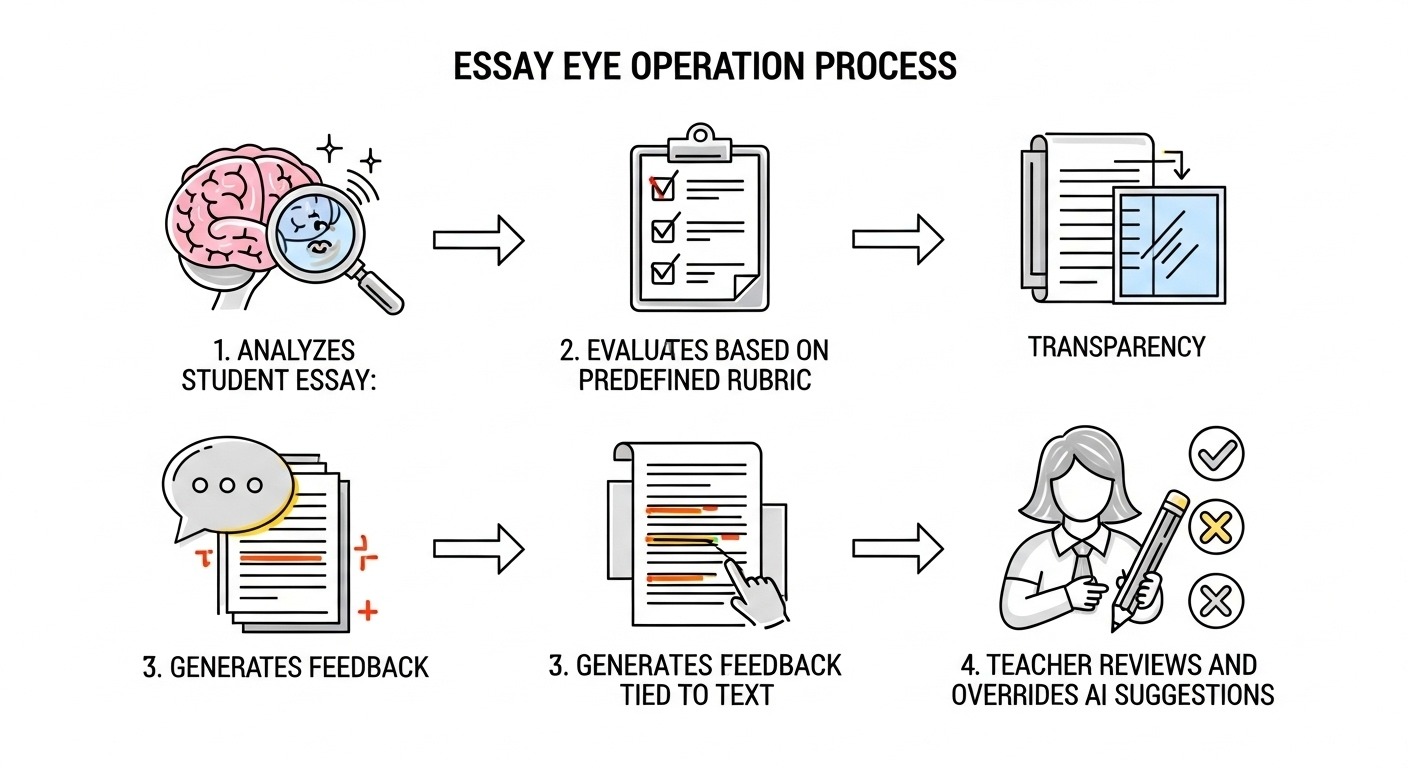

Here’s how Essay Eye addresses the “black box” concern head-on:

Transparent Evaluation Criteria: Teachers can view exactly which rubric items are being evaluated, what feedback is being given, and how scores are calculated.

Feedback Anchored in Student Text: Every comment, suggestion, and score is tied directly to the student’s writing. The feedback is traceable.

Human-in-the-Loop Control: Teachers have full authority to edit, override, or reject any AI-generated output. The final grade always rests with the educator.

This is not a mysterious algorithm making decisions in the dark. This is AI as a teaching assistant—working under the teacher’s guidance.
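To make the three properties above concrete, here is a minimal Python sketch of how a rubric-anchored comment with a teacher override might be represented. This is an illustration only, not Essay Eye's actual data model: the `RubricComment` class, its field names, and the sample essay are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class RubricComment:
    """One AI-generated score and comment for a single rubric item (hypothetical model)."""
    criterion: str                        # rubric item being evaluated
    max_points: int                       # points available for this item
    ai_points: int                        # score proposed by the AI
    quote: str                            # exact excerpt from the student's essay
    comment: str                          # feedback tied to that excerpt
    teacher_points: Optional[int] = None  # set only when the teacher overrides

    def is_anchored(self, essay: str) -> bool:
        # Traceability check: the quoted excerpt must appear verbatim in the essay.
        return self.quote in essay

    @property
    def final_points(self) -> int:
        # The teacher's score, when present, always wins over the AI's.
        return self.ai_points if self.teacher_points is None else self.teacher_points


def total_score(comments: List[RubricComment]) -> Tuple[int, int]:
    """Return (points earned, points possible) using the final score for each item."""
    earned = sum(c.final_points for c in comments)
    possible = sum(c.max_points for c in comments)
    return earned, possible


essay = ("School uniforms should be optional. "
         "Many students feel more confident in their own clothes.")
comments = [
    RubricComment("Thesis", 4, 3, "School uniforms should be optional.",
                  "Clear position; could preview the supporting reasons."),
    RubricComment("Evidence", 4, 2, "Many students feel more confident",
                  "This claim needs a source or a concrete example."),
]

comments[1].teacher_points = 3  # the teacher disagrees and raises the Evidence score

print(all(c.is_anchored(essay) for c in comments))  # True
print(total_score(comments))                        # (6, 8)
```

In a design like this, the AI's proposed score is never overwritten; the teacher's decision sits alongside it, so both remain visible.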

Recent Advances in AI Interpretability

As highlighted in the recent publications from Anthropic (“Scaling Monosemanticity”) and OpenAI (“Extracting Concepts from GPT-4”), the industry is making major progress in mechanistic interpretability—the science of understanding how AI models actually “think.”

This research has shown that the internal activity of LLMs can be decomposed into understandable “features” that correspond to human-recognizable concepts. Researchers are now able to isolate specific patterns of neural activation tied to certain ideas, themes, and behaviors. In research settings, these features can be monitored, adjusted, and even “steered” to shift a model’s behavior in predictable ways.

What does this mean for education?

We are rapidly moving from “black box” to “glass box” systems—where we can not only observe what the model is doing but also why it’s doing it.

Developers can fine-tune LLMs to emphasize safety, accuracy, and fairness—especially in high-stakes areas like student evaluation.

Tools like Essay Eye’s essay grader can be programmed to suppress undesirable features (e.g., bias, inappropriate tone) while enhancing desirable ones (e.g., clarity, alignment to standards).

This level of control and transparency is already being integrated into Essay Eye’s core architecture, ensuring that the model operates within clearly defined pedagogical boundaries.

AI Hallucinations: A Solved Problem?

While hallucinations remain a challenge in general-purpose AI models, they are largely mitigated in constrained environments like Essay Eye’s essay grader. The platform is not generating creative fiction or speculative answers—it is analyzing student text and evaluating it against a static rubric. This is not an open-ended task with infinite outputs. It is a structured, bounded, and predictable process.

Moreover, Essay Eye is continually monitored and updated through both automated testing and educator feedback loops. When issues are detected—whether it’s a misaligned comment or an unclear score—they are logged, reviewed, and corrected. This feedback loop improves model reliability over time, much like a human teacher learns from experience.

To put it plainly: Essay Eye’s essay grader is not hallucinating wild facts or speculative ideas. It’s doing exactly what a good teacher does—reading a student’s essay, identifying strengths and weaknesses, and offering constructive, rubric-aligned feedback.

Enhancing, Not Replacing, the Teacher

One of the most persistent misconceptions about educational AI tools is that they aim to replace teachers. Essay Eye takes the opposite approach: it empowers teachers.

Here’s what Essay Eye’s essay grader does:

Grades essays in seconds, freeing up teacher time.

Provides detailed, actionable feedback students can actually use.

Aligns feedback with curriculum standards and grade-level expectations.

Surfaces common student issues (e.g., weak thesis statements, lack of evidence) across a class, helping teachers target instruction.
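As a rough illustration of the last point, class-wide patterns can be surfaced by counting how many students share each issue. The sketch below assumes that each essay's feedback has already been reduced to a list of issue tags; the function name `common_issues`, the tag names, and the `min_share` threshold are hypothetical and are not taken from Essay Eye.

```python
from collections import Counter


def common_issues(class_feedback, min_share=0.3):
    """Return (issue, student_count) pairs for issues affecting at least
    `min_share` of the class, most common first.

    class_feedback maps a student identifier to a list of issue tags.
    """
    n_students = len(class_feedback)
    if n_students == 0:
        return []
    counts = Counter()
    for issues in class_feedback.values():
        counts.update(set(issues))  # count each issue at most once per student
    return [(issue, count) for issue, count in counts.most_common()
            if count / n_students >= min_share]


feedback = {
    "student_a": ["weak_thesis", "lack_of_evidence"],
    "student_b": ["weak_thesis"],
    "student_c": ["lack_of_evidence", "weak_thesis", "run_on_sentences"],
    "student_d": [],
}

print(common_issues(feedback, min_share=0.5))
# [('weak_thesis', 3), ('lack_of_evidence', 2)]
```

A teacher could use a summary like this to decide that a mini-lesson on thesis statements is worth more class time than one on run-on sentences.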

Here’s what Essay Eye does not do:

Make final grading decisions without teacher review.

Operate in a pedagogical vacuum.

Replace lesson planning, mentorship, or classroom management.

This is AI for teachers—not instead of teachers.

Real-World Safety: What Educators Need to Know

Incorporating Essay Eye into a school setting means adhering to the highest standards of educational technology safety and student data privacy. We take FERPA and COPPA compliance seriously. No student data is stored beyond what is necessary for evaluation, and all integrations with platforms like Google Workspace for Education follow secure, encrypted protocols.

In addition, Essay Eye has built-in safeguards to ensure responsible AI use:

Bias mitigation tools to reduce the influence of language style, dialect, or sociolect.

Editable output fields for teacher review and override.

Audit trails for every AI-generated comment or score, so nothing is hidden.

Opt-in AI explanations, where teachers can ask, “Why did the AI assign this grade?” and get an answer in plain English.
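For readers curious what an audit trail can look like in practice, here is a minimal Python sketch of an append-only log that records both AI outputs and teacher overrides. It is an assumption-laden illustration, not a description of Essay Eye's internals: the `AuditTrail` class, its method names, and the entry fields are all hypothetical.

```python
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log of AI and teacher actions on essays (hypothetical design)."""

    def __init__(self):
        self._entries = []

    def record(self, essay_id, actor, action, detail):
        # Entries are only ever appended, never edited or deleted.
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "essay_id": essay_id,
            "actor": actor,    # e.g. "ai" or "teacher"
            "action": action,  # e.g. "score", "comment", "override"
            "detail": detail,
        }
        self._entries.append(entry)
        return entry

    def history(self, essay_id):
        """All entries for one essay, in the order they were recorded."""
        return [e for e in self._entries if e["essay_id"] == essay_id]

    def export(self):
        """Serialize the full log as JSON Lines, one entry per line."""
        return "\n".join(json.dumps(e) for e in self._entries)


trail = AuditTrail()
trail.record("essay-17", "ai", "score", {"criterion": "Evidence", "points": 2})
trail.record("essay-17", "teacher", "override", {"criterion": "Evidence", "points": 3})
trail.record("essay-18", "ai", "comment", {"text": "Thesis is clear."})

for entry in trail.history("essay-17"):
    print(entry["actor"], entry["action"], entry["detail"])
```

Keeping the AI's original score and the teacher's override as separate entries means the log shows what the AI proposed as well as what the teacher finally decided.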

Educational Benefits of AI Grading Tools

Let’s not forget what we stand to gain. By clinging to outdated fears about AI’s “black box,” we risk denying students and teachers access to tools that can meaningfully improve learning.

Here are just a few benefits of using Essay Eye’s essay grader in the classroom:

Faster Feedback: Students receive formative feedback within minutes, enabling rapid revision and learning.

Personalized Instruction: Teachers can identify individual and group trends to inform instruction.

Equity and Consistency: Applying the same rubric to every essay makes grading more consistent and can reduce the influence of unconscious bias.

Teacher Wellbeing: Offloading repetitive tasks like first-draft grading allows teachers to focus on higher-order instruction and student relationships.

Toward a Future of Trustworthy AI in Education

The AI landscape is evolving rapidly, and so must our thinking. The “black box” metaphor was once a valid warning. But it no longer describes how advanced and responsible AI tools like Essay Eye’s essay grader work. With better interpretability, strong safety controls, human-in-the-loop oversight, and clear pedagogical design, we are building a future where AI supports learning while staying transparent and trustworthy.